Solana Validator 101: Transaction Processing

Welcome to the first article in Jito Labs’ series on the Solana validator. In this article, we’ll do a technical deep dive on the lifecycle of a transaction as it moves from an unsigned transaction to a propagated block.

If you want to follow along in code, we’re working off commit cd6f931223. This is the master branch as of November 21, 2021, so validators are likely running an older version of the code on this date.

There is a lot of activity in this codebase, so always check the Solana GitHub for the most up-to-date code. Load it up in your favorite IDE and click through definitions as you read to poke around.

Jito Labs is building MEV infrastructure for Solana. If reverse engineering, hacking on code, or building high performance systems interests you, we’re hiring engineers! Please DM @buffalu__ on Twitter.

So you’re aping into some coins on Serum or Mango. You sign the transaction in your wallet and your transaction is quickly confirmed with a notification. What just happened?

- The dapp built a transaction to buy some amount of tokens.

- The dapp sent the transaction to your wallet (Phantom, Sollet, etc.) to be signed.

- The wallet signed the transaction using your private key and sent it back to the dapp.

- The dapp took the signed transaction and used the sendTransaction HTTP API call to send it to the current RPC provider specified in the dapp.

- The RPC server sent your transaction as a UDP packet to the current and next validator on the leader schedule.

- The validator’s TPU received the transaction, verified the signature using CPU or GPU, executed it, and propagated it to other validators in the network.
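To make the sendTransaction step concrete, here is a minimal sketch of the JSON-RPC request body a dapp might post to its RPC provider. The helper name `build_send_transaction_request` and the placeholder bytes are ours, not from any wallet or dapp; a real signed transaction would come from the wallet.

```python
import base64
import json


def build_send_transaction_request(signed_tx: bytes, request_id: int = 1) -> str:
    """Build the JSON-RPC 2.0 body for a sendTransaction call (hypothetical helper)."""
    payload = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sendTransaction",
        "params": [
            # The fully signed, serialized transaction, encoded as a string.
            base64.b64encode(signed_tx).decode("ascii"),
            # Tell the RPC server which encoding the string uses.
            {"encoding": "base64"},
        ],
    }
    return json.dumps(payload)


# Placeholder bytes standing in for a real signed transaction.
body = build_send_transaction_request(b"\x01" * 64)
```

The resulting string would be sent as the body of an HTTP POST to the RPC endpoint; the sketch stops short of the network call on purpose.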

Solana has a known leader schedule generated every epoch (~2 days), so the RPC server sends transactions directly to the current and next leader instead of gossiping them randomly like the Ethereum mempool does. Read more about the comparison here.
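Because the schedule is known ahead of time, picking the two targets is just a lookup. The sketch below assumes a simplified schedule, a list where entry i names the leader of the i-th slot in the epoch; the function name and the list layout are illustrative, not the validator's actual data structures.

```python
from typing import List, Optional, Tuple


def current_and_next_leader(
    schedule: List[str], slot_index: int
) -> Tuple[str, Optional[str]]:
    """Return the leader of `slot_index` and the next different leader after it.

    `schedule` is a hypothetical per-epoch list: schedule[i] is the identity
    of the validator that leads slot i of the epoch.
    """
    current = schedule[slot_index]
    # Leaders usually hold several consecutive slots, so skip ahead until
    # the identity changes.
    for leader in schedule[slot_index + 1:]:
        if leader != current:
            return current, leader
    # No later leader in this epoch; the next epoch has its own schedule.
    return current, None


schedule = ["A"] * 4 + ["B"] * 4 + ["A"] * 4
print(current_and_next_leader(schedule, 1))
```

An RPC server following this idea would send the packet to both returned identities.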

Let’s go deeper.

Transaction Processing Unit

At this point, the RPC service that our dapp is using has received the transaction over HTTP, converted the transaction into a UDP packet, looked up the current leader’s info using the leader schedule, and has sent it to the leader’s TPU.

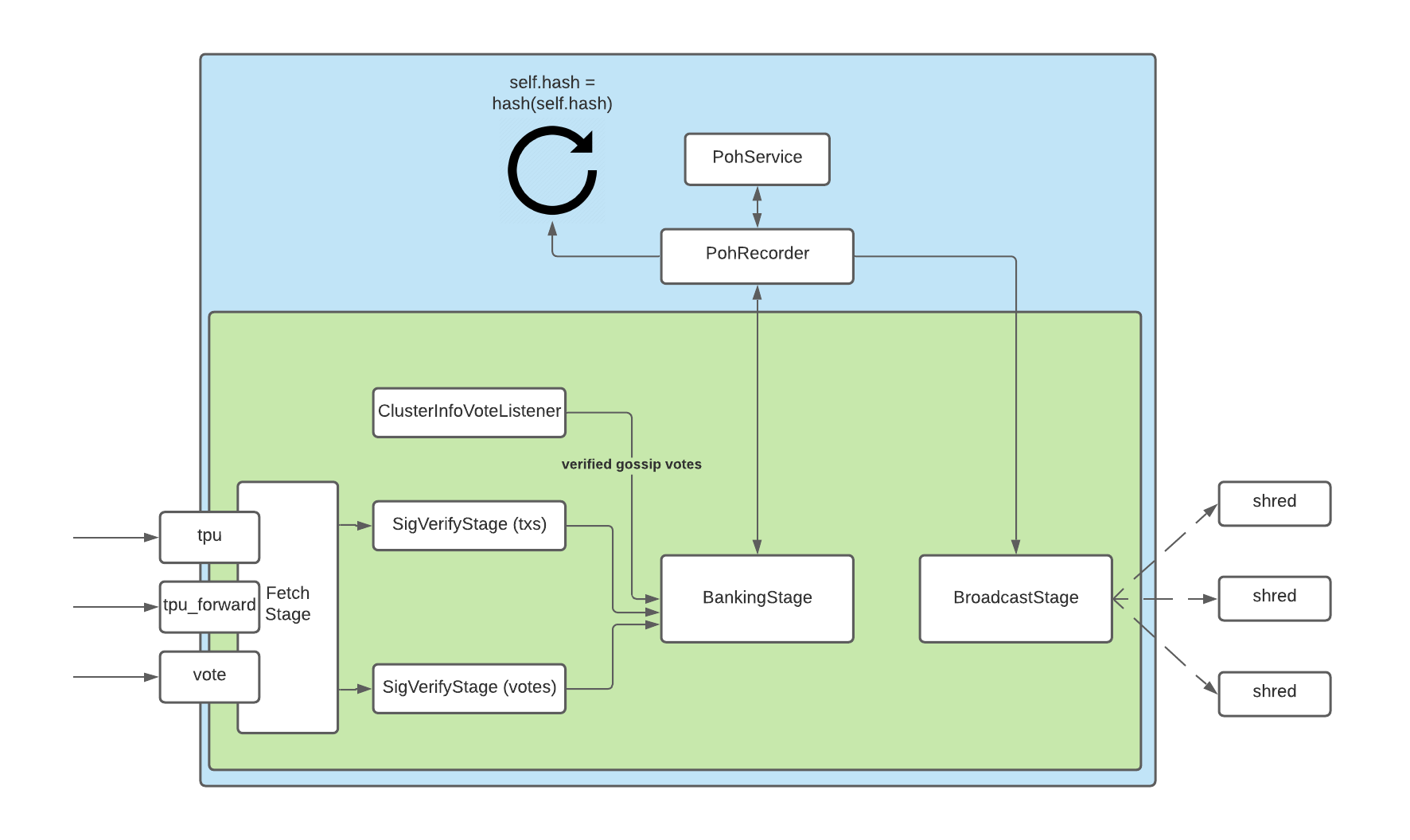

So what is this TPU thing? The transaction processing unit (TPU) processes transactions! It leverages message queues (Rust channels) to build a software pipeline consisting of multiple stages. The output of stage n is hooked into stage n + 1.
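As a rough illustration of stages wired together by channels, here is a small sketch using Python threads and queues in place of Rust channels. The stage functions, `run_stage`, and `run_pipeline` are invented for this example and do not mirror the validator's code.

```python
import queue
import threading

STOP = object()  # sentinel that tells a stage to shut down


def run_stage(work, inbox, outbox):
    """Apply `work` to every item from `inbox` and push the result to `outbox`."""
    while True:
        item = inbox.get()
        if item is STOP:
            outbox.put(STOP)  # propagate shutdown to the next stage
            return
        outbox.put(work(item))


def run_pipeline(stages, items):
    """Chain stages with queues: the output of stage n feeds stage n + 1."""
    queues = [queue.Queue() for _ in range(len(stages) + 1)]
    threads = [
        threading.Thread(target=run_stage, args=(work, queues[i], queues[i + 1]))
        for i, work in enumerate(stages)
    ]
    for thread in threads:
        thread.start()
    for item in items:
        queues[0].put(item)
    queues[0].put(STOP)

    results = []
    while True:
        out = queues[-1].get()
        if out is STOP:
            break
        results.append(out)
    for thread in threads:
        thread.join()
    return results


print(run_pipeline([str.strip, str.upper], [" a ", "b "]))
```

Each stage runs on its own thread and only talks to its neighbours through a queue, which is the property the TPU relies on.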

The Solana validator is unique compared to other blockchains because its nodes rely on UDP packets to communicate with each other. UDP is a connectionless protocol and doesn’t guarantee the order or delivery of messages. This removes potential TCP back-pressure issues and connection management overhead while keeping server and networking setup simple for validator operators. However, it also limits transaction and gossip message sizes, makes validators easier to DoS, and may cause transactions and other packets to get dropped.
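The size limit and the fire-and-forget nature of UDP can be sketched in a few lines. The 1232-byte cap is Solana's packet data size (the 1280-byte IPv6 minimum MTU minus 48 bytes of headers); the `send_packet` helper is our own illustration, not validator code.

```python
import socket

# Maximum payload of a single Solana packet: 1280 - 40 (IPv6) - 8 (UDP).
PACKET_DATA_SIZE = 1232


def send_packet(sock: socket.socket, payload: bytes, addr) -> bool:
    """Fire-and-forget send. Returns False if the payload cannot fit in one packet."""
    if len(payload) > PACKET_DATA_SIZE:
        return False
    # No handshake and no acknowledgement: if the packet is lost in transit,
    # the sender never finds out.
    sock.sendto(payload, addr)
    return True
```

A caller would create a `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and pass the leader's TPU address; anything larger than one packet has to be rejected up front.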

The FetchStage has dedicated sockets for each packet type:

- tpu: normal transactions (Serum orders, NFT minting, token transfers, etc.)

- tpu_vote: votes

- tpu_forwards: if the current leader can’t process all transactions, it forwards unprocessed packets to the next leader on this port.

Packets are batched into groups of 128 and forwarded to the SigVerifyStage.
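Batching itself is simple. A minimal sketch, assuming packets arrive as an iterable of raw byte strings (the function and constant names are ours):

```python
from typing import Iterable, Iterator, List

PACKETS_PER_BATCH = 128  # batch size mentioned in the text


def batch_packets(
    packets: Iterable[bytes], size: int = PACKETS_PER_BATCH
) -> Iterator[List[bytes]]:
    """Group incoming packets into lists of at most `size` packets."""
    batch: List[bytes] = []
    for packet in packets:
        batch.append(packet)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        # Flush the final, partially filled batch.
        yield batch


sizes = [len(b) for b in batch_packets(bytes([i % 256]) for i in range(300))]
print(sizes)
```

Handing whole batches to the next stage amortizes the per-message cost of the channel between stages.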

The sockets mentioned above are created here and stored in the ContactInfo struct. ContactInfo contains information on the node and is shared with the rest of the network through a gossip protocol.

The SigVerifyStage verifies signatures on packets and marks them for discard if they fail. Remember, as far as the software is concerned, these are still packets with some metadata; it still doesn’t know whether they are transactions or not.
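The "mark, don't drop" idea can be sketched as below. Solana signatures are Ed25519, which Python's standard library does not provide, so the verifier here is a caller-supplied function; the `Packet` class and `mark_invalid` are hypothetical names for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Packet:
    data: bytes
    discard: bool = False  # metadata flag that later stages check


def mark_invalid(batch: List[Packet], verify: Callable[[bytes], bool]) -> int:
    """Flag packets whose signature check fails; return how many were flagged.

    `verify` stands in for a real Ed25519 check and is an assumption of
    this sketch.
    """
    flagged = 0
    for packet in batch:
        if not verify(packet.data):
            packet.discard = True
            flagged += 1
    return flagged


# Toy verifier: pretend that only payloads starting with b"ok" are valid.
batch = [Packet(b"ok-1"), Packet(b"bad"), Packet(b"ok-2")]
print(mark_invalid(batch, lambda data: data.startswith(b"ok")))
```

Keeping failed packets in the batch with a flag set means the batch keeps its shape as it moves down the pipeline.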

If the validator has a GPU installed, the stage uses it for signature verification. It also contains logic to shed excess packets under high load, using source IP addresses to decide which packets to drop.

Votes and normal packets run in two separate pipelines. Packets arriving in the vote pipeline are filtered using simple logic to determine if the packet is a vote or not — this should help prevent DOS on the vote pipeline. This is another mitigation to avoid another network outage similar to the one earlier this year.

The BankingStage is the meat of the validator and the hardest section to understand, at least from what we’ve reviewed so far ;)

There are three packet types being sent to this stage:

- Verified gossip vote packets

- Verified tpu_vote packets

- Verified tpu packets (normal transactions)

Each packet type has its own processing thread and the normal transaction pipeline has two threads.

When the current node becomes a leader, all packets in the TPU go through the following steps:

- Deserialize the packet into a SanitizedTransaction.

- Run the transaction through a Quality of Service (QoS) model. This selects transactions to execute depending on a few properties (signature, length of instruction data bytes, and some type of cost model based on access patterns for a given program id).

- The pipeline then grabs a batch of transactions to be executed. This group of transactions is greedily selected to form a parallelizable entry (a group of transactions that can be executed in parallel). To do this, it uses the isWritable flag that clients set when building transactions and a per-account read-write lock to ensure there are no data races.

- Transactions are executed.

- The results are sent to the PohService and then forwarded to the broadcast stage to be shredded (packetized) and propagated to the rest of the network. They are also saved to the bank and accounts database.
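The greedy selection step above can be sketched as follows. This is a simplified model under our own assumptions: a transaction is just a name plus the sets of accounts it reads and writes, and `select_parallel_batch` is an invented helper, not the BankingStage's real logic.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class Tx:
    name: str
    reads: FrozenSet[str] = frozenset()
    writes: FrozenSet[str] = frozenset()


def select_parallel_batch(pending: List[Tx]) -> Tuple[List[Tx], List[Tx]]:
    """Greedily pick transactions that can run in parallel.

    Returns (picked, deferred). Deferred transactions conflict with one
    already picked and would be retried later.
    """
    read_locked: Set[str] = set()
    write_locked: Set[str] = set()
    picked: List[Tx] = []
    deferred: List[Tx] = []
    for tx in pending:
        # A write conflicts with any existing lock on the account;
        # a read conflicts only with an existing write lock.
        conflict = (tx.writes & (read_locked | write_locked)) or (
            tx.reads & write_locked
        )
        if conflict:
            deferred.append(tx)
            continue
        read_locked |= tx.reads
        write_locked |= tx.writes
        picked.append(tx)
    return picked, deferred


pending = [
    Tx("t1", writes=frozenset({"C"})),
    Tx("t2", reads=frozenset({"C"})),
    Tx("t3", reads=frozenset({"A"})),
    Tx("t4", reads=frozenset({"A"})),
]
picked, deferred = select_parallel_batch(pending)
print([t.name for t in picked], [t.name for t in deferred])
```

Here t2 is deferred because t1 already holds the write lock on C, while t3 and t4 can share a read lock on A.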

Transactions that aren’t processed will be buffered and retried on the next iteration. If a validator receives transactions a short time before it’s time to produce blocks, it will hold them until it becomes the leader. If leader n’s time is up and there are still unprocessed transactions, it will forward them to the TPU forward port of leader n + 1.
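One way to picture the buffering rules is as a small decision function. Everything here is a sketch: the `Action` names, the `decide` function, and the `hold_window` threshold are our assumptions, chosen only to mirror the three cases described above.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    PROCESS = "process"  # we are the leader: execute buffered packets
    HOLD = "hold"        # we lead soon: keep packets until our slot starts
    FORWARD = "forward"  # otherwise: send packets to the next leader's tpu_forwards port


def decide(
    is_leader: bool,
    slots_until_leader: Optional[int],
    hold_window: int = 2,  # hypothetical threshold for "a short time before"
) -> Action:
    """Choose what to do with buffered, unprocessed packets."""
    if is_leader:
        return Action.PROCESS
    if slots_until_leader is not None and slots_until_leader <= hold_window:
        return Action.HOLD
    return Action.FORWARD


print(decide(is_leader=False, slots_until_leader=1))
```

The real validator makes this choice with more inputs than two, so treat the thresholds as placeholders.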

Parallel Processing

One of Solana’s spicy features is the runtime’s ability to parallelize transaction processing enabled by the programming model’s separation of code and state (known as Sealevel). Transaction instructions are required to explicitly mark what accounts will be read from and written to.

This can be a huge pain in the ass for developers, but it’s for a good reason — it allows the BankingStage to execute batches of transactions in parallel without running into concurrency issues.

Transactions are processed in batches. The entries produced by each batch contain a list of transactions that can be executed in parallel. Therefore, we need RW locking within each batch as well as across the batches being processed in other parallel pipelines.

Let’s walk through a few examples. In reality, transactions will access multiple accounts. For simplicity’s sake, imagine each box is a transaction that is accessing one account.

In the above example, account C is the only account shared between the two batches in the two separate pipelines. One batch is attempting to read from C and another attempting to write. In order to avoid any race conditions, the validator uses a read-write lock to ensure no data corruption. In this case, the top pipeline locks C first and starts execution. At the same time, the bottom pipeline attempts to execute the batch, but C is already locked, so it executes everything except for C and saves it for the next iteration.
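The two-pipeline case can be modelled with a shared, non-blocking lock table. This is a toy version under our own assumptions: `AccountLocks`, `try_lock`, and `unlock` are illustrative names, and the real validator's locking lives in its accounts code.

```python
import threading
from typing import Dict, Iterable, Set


class AccountLocks:
    """Shared table of per-account read/write locks that never blocks."""

    def __init__(self) -> None:
        self._mutex = threading.Lock()  # protects the table itself
        self._readers: Dict[str, int] = {}
        self._writers: Set[str] = set()

    def try_lock(self, reads: Iterable[str], writes: Iterable[str]) -> bool:
        """Take all locks or none. Returns False if any account conflicts."""
        reads, writes = set(reads), set(writes)
        with self._mutex:
            # Nobody may touch an account that is currently write-locked.
            if (reads | writes) & self._writers:
                return False
            # A writer must wait for all readers to finish.
            if any(self._readers.get(account, 0) for account in writes):
                return False
            for account in reads:
                self._readers[account] = self._readers.get(account, 0) + 1
            self._writers |= writes
            return True

    def unlock(self, reads: Iterable[str], writes: Iterable[str]) -> None:
        with self._mutex:
            for account in set(reads):
                self._readers[account] -= 1
            self._writers -= set(writes)


locks = AccountLocks()
top = locks.try_lock(reads=["C"], writes=[])      # top pipeline reads C
bottom = locks.try_lock(reads=[], writes=["C"])   # bottom pipeline wants to write C
print(top, bottom)
```

In this model the bottom pipeline fails to lock C, executes its other transactions, and retries the one touching C after the top pipeline calls `unlock`.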

In this example, there are multiple transactions which contain reads and writes to account C. Since the entries propagated to block producers need to support parallel execution, the batch will grab the first access to C, plus any later transactions that still follow the RW lock rules.

Proof of History (PoH) is Solana’s technique for proving the passage of time. It is similar to a verifiable delay function (VDF). Read more about it here.
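The core trick is a hash chain: each output is the input to the next hash, so producing N hashes takes N sequential steps. Below is a minimal sketch using SHA-256; the function names are ours, and the real implementation does considerably more than this.

```python
import hashlib


def extend_chain(state: bytes, num_hashes: int) -> bytes:
    """Hash `state` into itself `num_hashes` times.

    Every step needs the previous output, so the work cannot be skipped
    or reordered by the producer.
    """
    for _ in range(num_hashes):
        state = hashlib.sha256(state).digest()
    return state


def verify_chain(start: bytes, num_hashes: int, claimed_end: bytes) -> bool:
    """Replay the chain to check that `claimed_end` is `num_hashes` steps after `start`."""
    return extend_chain(start, num_hashes) == claimed_end


start = b"solana summer"
end = extend_chain(start, 1000)
print(verify_chain(start, 1000, end))
```

Anyone who sees `start`, the hash count, and `end` can replay the chain and convince themselves that at least that much sequential hashing happened in between.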

The PohService is responsible for generating ticks which are units of time smaller than a slot (1 slot = N ticks). This is pinned to a processor core and runs a hash loop like:output = "solana summer"

output = "solana summer"

while 1:

output = hash([output])Hashes will be generated from themselves until a Record is received from the BankingStage, at which point the current hash and mixin (a hash of all transactions in the batch) are combined. These records are converted to Entries so that they can be broadcasted to the network via the BroadcastStage. The pseudo-code ends up looking something like

output = "solana summer"

record_queue = Queue()

while 1:

record = record_queue.maybe_pop()

if record:

output = hash([output, record])

else:

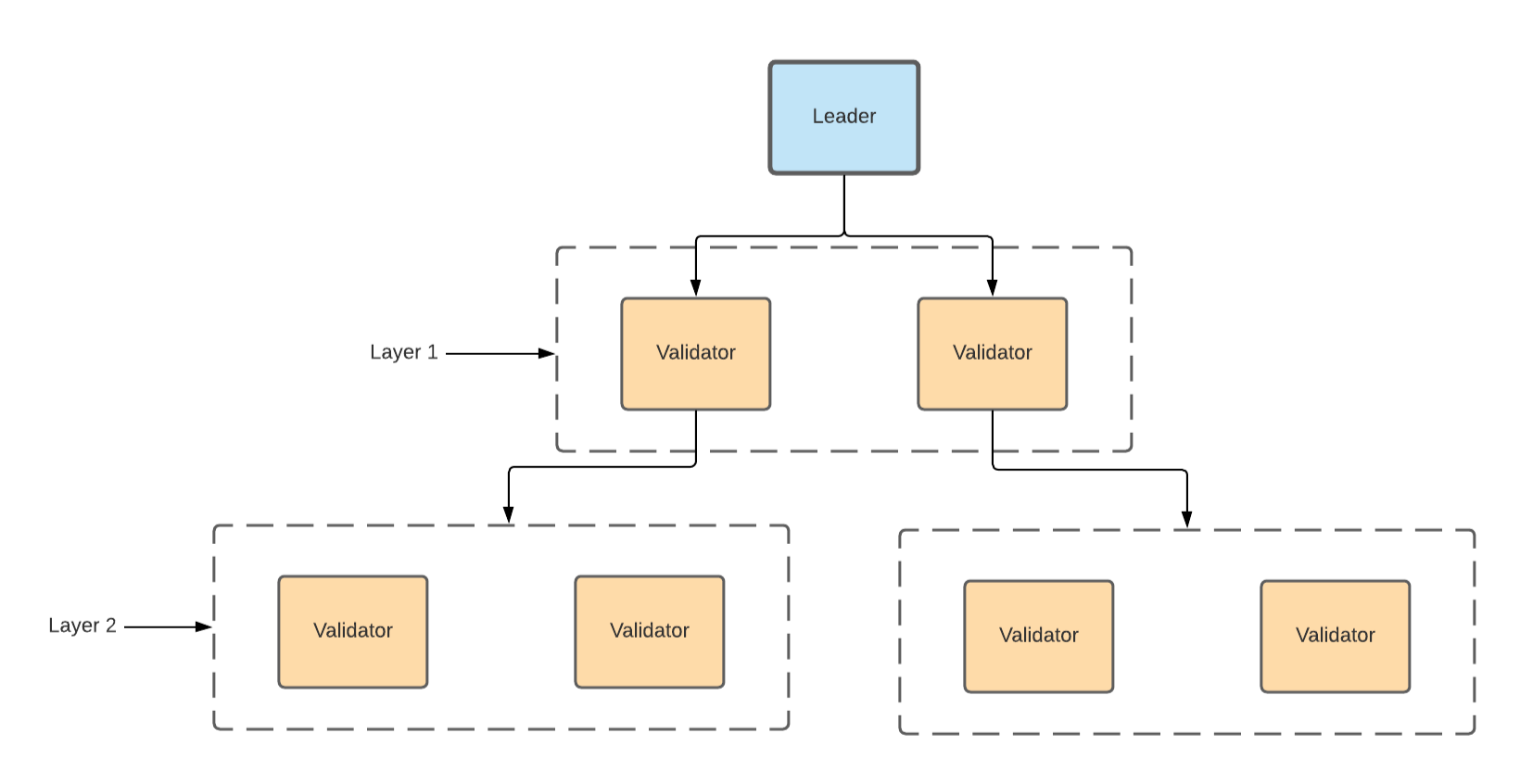

output = hash([output])This stage is responsible for broadcasting Entries generated by PohService to the rest of the network. These entries are converted to Shreds, which represent the smallest unit of a block and then sent to the rest of the network using a block propagation technique called Turbine. The tl;dr is that each node receives a partial view of the block from its parent node (up to 64KB packet size), and shares it with its own children and so on. It looks something like this:

On the receiving side, validators listen for shreds and convert them back into entries and then blocks. The details of that will be in a future article.
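Until that article lands, here is a deliberately simplified picture of shredding and reassembly. It only splits bytes into indexed chunks and joins them back; real shreds also carry signatures and erasure-coding data, which this sketch leaves out. The `shred` and `reassemble` helpers are our own names.

```python
from typing import Iterable, List, Optional, Tuple

Shred = Tuple[int, bytes]  # (index within the block, chunk of data)


def shred(block: bytes, shred_size: int) -> List[Shred]:
    """Split serialized entries into indexed chunks small enough to send as packets."""
    return [
        (offset // shred_size, block[offset:offset + shred_size])
        for offset in range(0, len(block), shred_size)
    ]


def reassemble(shreds: Iterable[Shred], expected_count: int) -> Optional[bytes]:
    """Rebuild the block from shreds received in any order.

    Returns None while any shred is still missing.
    """
    by_index = dict(shreds)  # duplicate deliveries collapse into one entry
    if any(index not in by_index for index in range(expected_count)):
        return None
    return b"".join(by_index[index] for index in range(expected_count))


pieces = shred(b"entries for one block", shred_size=8)
print(reassemble(reversed(pieces), len(pieces)))
```

Because each chunk carries its index, the receiver does not care which peer delivered it or in what order it arrived.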

Thank You

As you may be able to tell, the validator is a very complex piece of engineering and we have just scratched the surface. It takes inspiration from operating systems and one can tell there are lots of systems-level tricks the team uses to increase the performance. Big kudos to the Solana engineering team and contributors for this amazing piece of engineering.

Stay tuned for our next pieces where we go more in depth into the challenges of building a more democratic MEV system on Solana.

Thank you to the Solana engineers for help in Discord and thank you for reading. Please follow @jito_network, @buffalu__, and @segfaultdoctor on Twitter to stay tuned for our next articles.

If any of this is interesting to you, we’re hiring! Please DM @buffalu__ on Twitter.